
Update ConfusionMatrix.png, inceptionv3.ipynb, acc_train.txt, acc_valid.txt, helper_evaluation.py, helper_plotting.py, loss_train.txt, loss_valid.txt, stats.txt

Showing 9 changed files with 2507 additions and 0 deletions:

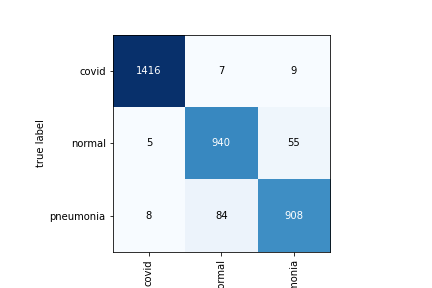

- ConfusionMatrix.png: 0 additions, 0 deletions
- acc_train.txt: 1 addition, 0 deletions
- acc_valid.txt: 1 addition, 0 deletions
- helper_evaluation.py: 78 additions, 0 deletions
- helper_plotting.py: 190 additions, 0 deletions
- inceptionv3.ipynb: 1889 additions, 0 deletions
- loss_train.txt: 1 addition, 0 deletions
- loss_valid.txt: 1 addition, 0 deletions
- stats.txt: 346 additions, 0 deletions

Every file in this commit is newly added (file mode `0 → 100644`). ConfusionMatrix.png is 7.46 KiB.